The year is 1997. A young boy is about to watch a porn video for the first time on a grainy VHS tape. An older version of himself from the far off year of 2025 appears.

Me: “You know, in the future, you’ll make your own porn videos.”

90s me: “Wow, you mean I’ll get to have lots of hot sex!?!?”

Me: “Ha! No. So Nvidia will release this system called CUDA…”

I thought this was going to go Watchmen for a moment. Like…

It is 1997, I am a young boy, I am jerking off to a grainy porno playing over stolen cinemax.

It is 2007, i am in my dorm, i am jerking off to a forum thread full of hi-res porno.

It is 2027, i am jerking off to an ai porno stream that mutates to my desires in real time. I am about to nut so hard that it shatters my perception of time.

The AI camera will still zoom in on the guys nuts as you’re about to bust tho.

Why ask it for gay porn if it doesn’t?

Where were you in 2017?

I figured rule of threes meant it was funnier to leave it out. 2017 would have been sad gooning to pornhub during the first trump nightmare.

Then 2027 could be sad gooning to ai hyperporn during the second trump nightmare.

Maybe I should have used 20 year jumps, but "2037, I am jerking off because there’s no food, and the internet is nothing but ai porn.’ didn’t seem as funny a point for the “time shattering” bit.

Look it was a long thread okay.

Went to college

You nut so hard in 2027 it teleports you back to 1997?

Then another company called Deepseek will release a system called low level programming that replaces CUDA.

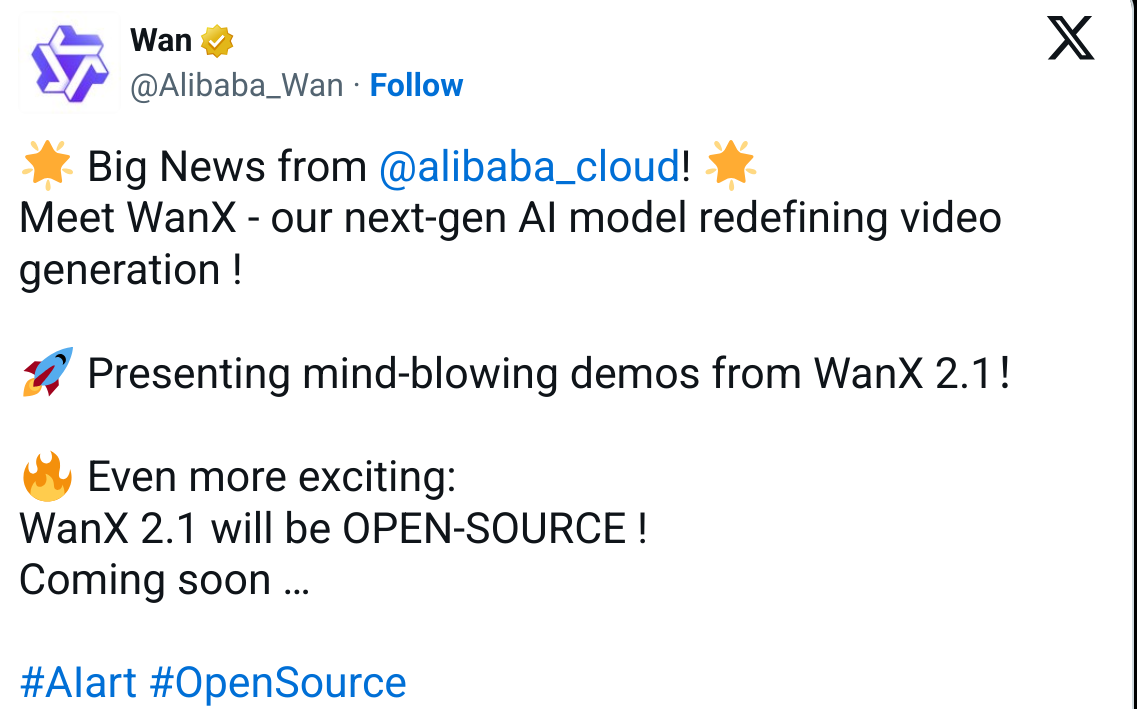

It’s super funny to me that they named it “WanX” haha

Kudos to the English speaking guy on the team who pushed that under the radar. That has got to be intentional.

it fulfilled its name mandate 100%

mind-blowing

Hmm, it’s certainly blowing something.

I am going to make this statement openly on the Internet. Feel free to make AI generated porn of me as long as it involves adults. Nobody is going to believe that a video of me getting railed by a pink wolf furry is real. Everyone knows I’m not that lucky.

Fortunately, most of my family is so tech illiterate that even if a real video got out, I could just tell them it’s a deepfake and they’d probably believe me.

Does the wolf need to be an adult in human years or dog years?

i think that it should probably be capable of consent, that would be my guess.

I mean, I think he said it’s a pink wolf furry, so you’re probably good if he’s the one penetrating. If it was a pink furry wolf, on the other hand…

i believe that is what they said, that is a pretty good chance, but still good to be on the safe side i suppose.

Asking the important questions…

First off, I am sex positive, pro porn, pro sex work, and don’t believe sex work should be shameful, and that there is nothing wrong about buying intimacy from a willing seller.

That said. The current state of the industry and the conditions for many professionals raises serious ethical issues. Coercion being the biggest issue.

I am torn about AI porn. On one hand it can produce porn without suffering, on the other hand it might be trained on other people’s work and take people’s jobs.

I think another major point to consider going forward is if it is problematic if people can generate all sorts of illegal stuff. If it is AI generated it is a victimless crime, so should it be illegal? I personally feel uncomfortable with the thought of several things being legal, but I can’t logically argue for it being illegal without a victim.

i have no problem with ai porn assuming it’s not based on any real identities, i think that should be considered identity theft or impersonation or something.

Outside of that, it’s more complicated, but i don’t think it’s a net negative, people will still thrive in the porn industry, it’s been around since it’s been possible, i don’t see why it wouldn’t continue.

Identity theft only makes sense for businesses. I can sketch naked Johnny Depp in my sketchbook and do whatever I want with it and no one can stop me. Why should an AI tool be any different if distribution is not involved?

revenge porn, simple as. Creating fake revenge porn of real people is still to some degree revenge porn, and i would argue stealing someone’s identity/impersonation.

To be clear, your example is a sketch of johnny depp, i’m talking about a video of a person that resembles the likeness of another person, where the entire video is manufactured. Those are fundamentally two different things.

Again you’re talking about distribution

sort of. There are arguments that private ownership of these videos is also weird and shitty, however i think impersonation and identity theft are going to be the two most broadly applicable instances of relevant law here. Otherwise i can see issues cropping up.

Other people do not have any inherent rights to your likeness, you should not simply be able to pretend to be someone else. That’s considered identity theft/fraud when we do it with legally identifying papers, it’s a similar case here i think.

But the thing is it’s not a relevant law here at all as nothing is being distributed and no one is being harmed. Would you say the same thing if AI is not involved? Sure it can be creepy and weird and whatnot but it’s not inherently harmful or at least it’s not obvious how it would be.

the only perceivable reason to create these videos is either for private consumption, in which case, who gives a fuck. Or for public distribution, otherwise you wouldn’t create them. And you’d have to be a bit of a weird breed to create AI porn of specific people for private consumption.

If AI isn’t involved, the same general principles would apply, except it might include more people now.

I guess the point is this enables the mass production of revenge porn essentially at a person-on-the-street level, which makes it much harder to punish and prevent distribution. When relatively few sources produce the unwanted product, punishing only the distribution might be a viable method. But when the production method becomes available to the masses, the only feasible control mechanism is to try to regulate the production method. It is all a matter of where the most efficient position to put the bottleneck is.

For instance, when 3D printing allows people to produce automatic rifles in their homes, saying “civil use of automatic rifles is illegal so that is fine” is useless.

I think that’s a fair point and I wonder how this will affect freedom of expression on the internet. If you can’t find the distributor then it’ll be really tough to get a handle on this.

On the other hand the sheer overabundance could simply break the entire value of revenge porn, as in a “nothing is real anyway so it doesn’t matter” sort of thing, which I hope would be the case. No one will be watching revenge porn cause they can generate any porn they want in a heartbeat. That’s the ideal scenario anyway.

It is indeed a complicated problem with many intertwined variables, wouldn’t wanna be in the shoes of policy makers (assuming that they actually are searching for an honest solution and not trying to turn this into profit lol).

For instance, too much regulation in fields like this would essentially kill high quality open source AI tools and make most of them proprietary software, leaving the field at the mercy of tech monopolies. This is probably what these monopolies want and they will surely try to push things this way to kill competition (talk about capitalism spurring competition and innovation!). They might even don the cloak of some of these bad actors to speed up the process. Given the possible application range of AI, this is probably even more dangerous than flooding the internet with revenge porn.

100% freedom, no regulations will essentially lead to a mixed situation of creative and possibly groundbreaking uses of the tech vs many bad actors using the tech for things like scamming, disinformation etc. How it will balance out in the long run is probably very hard to predict.

I think two things are clear: 1- both extremes are not ideal, 2- between the two extremes, 100% freedom is still the better option (heavy regulation just exchanges many small bad actors for a couple giant bad actors and chokes any possible good outcomes).

Based on these, starting with a solution closer to the “freedom edge” and improving it step by step based on results is probably the most sensible approach.

Refresh for a new fake person

this one’s a classic.

I’ve found that there’s a lot of things on the Internet that went wrong because it was ad supported for “free”. Porn is one of them.

There is ethically produced porn out there, but you’re going to have to pay for it. Incidentally, it also tends to be better porn overall. The versions of the videos they put up on tube sites are usually cut down, and are only part of their complete library. Up through 2012 or so, the tube sites were mostly pirated content, but then they came to an agreement with the mainstream porn industry. Now it’s mostly the studios putting up their own content (plus independent, verified creators), and anything pirated gets taken down fast.

Anyway, sites like Crash Pad Series, Erika Lust, Vanessa Cliff, and Adulttime (the most mainstream of this list) are worth a subscription fee.

> without a victim

It was trained on something.

It can generate combinations of things that it is not trained on, so not necessarily a victim. But of course there might be something in there, I won’t deny that.

However the act of generating something does not create a new victim unless there is someone’s likeness and it is shared? Or is there something ethical here that I am missing?

(Yes, all current AI is basically collective piracy of everyone’s IP, but besides that)

Watching videos of rape doesn’t create a new victim. But we consider it additional abuse of an existing victim.

So take that video and modify it a bit. Color correct or something. That’s still abuse, right?

So the question is, at what point in modifying the video does it become not abuse? When you can’t recognize the person? But I think simply blurring the face wouldn’t suffice. So when?

That’s the gray area. AI is trained on images of abuse (we know it’s in there somewhere). So at what point can we say the modified images are okay because the abused person has been removed enough from the data?

I can’t make that call. And because I can’t make that call, I can’t support the concept.

I mean, there’s another side to this.

Assume you have exacting control of training data. You give it consensual sexual play, including rough play, bdsm play, and cnc play. We are 100% certain the content is consensual in this hypothetical.

Is the output a grey area, even if it seems like real rape?

Now another hypothetical. A person closes their eyes and imagines raping someone. “Real” rape. Is that a grey area?

Let’s build on that. Let’s say this person is a talented artist, and they draw out their imagined rape scene, which we are 100% certain is a non-consensual scene imagined by the artist. Is this a grey area?

We can build on that further. What if they take the time to animate this scene? Is that a grey area?

When does the above cross into a problem? Is it the AI making something that seems like rape but is built on consensual content? The thought of a person imagining a real rape? The putting of that thought onto a still image? The animating?

Or is it none of them?

> Is the output a grey area, even if it seems like real rape?

on a base semantic and mechanic level, no, not at all. They aren’t real people, there aren’t any victims involved, and there aren’t any perpetrators. You might even be able to argue the opposite, that this is actually a net positive, because it prevents people from consuming real abuse.

> Now another hypothetical. A person closes their eyes and imagines raping someone. “Real” rape. Is that a grey area?

until you can either publicly display your own or someone else’s thought process, or read people’s minds, definitionally, this is an impossible question to answer. So the default is no, because it can’t be grounded in any frame of reality.

> Let’s build on that. Let’s say this person is a talented artist, and they draw out their imagined rape scene, which we are 100% certain is a non-consensual scene imagined by the artist. Is this a grey area?

assuming it depicts no real persons or identities, no, there is nothing necessarily wrong about this, in fact i would defer back to the first answer for this one.

> We can build on that further. What if they take the time to animate this scene? Is that a grey area?

this is the same as the previous question, media format makes no difference, it’s telling the same story.

> When does the above cross into a problem?

most people would argue, and i think precedent would probably agree, that this would start to be a problem when explicit external influences are a part of the motivation, rather than an explicitly internally motivated process. There is necessarily a morality line that must be crossed to become a more negative thing, than it is a positive thing. The question is how to define that line in regards to AI.

We already allow simulated rape in tv and movies. AI simply allows a more graphical portrayal.

Consensual training data makes it ok. I think AI companies should be accountable for curating inputs.

Any art is ok as long as the artist consents. Even if they’re drawing horrible things, it’s just a drawing.

Now the real question is, should we include rapes of people who have died and have no family? Because then you can’t even argue increased suffering of the victim.

But maybe this just gets solved by curation and the “don’t be a dick” rule. Because the above sounds kinda dickish.

I see the issue with how much of a crime is enough for it to be okay, and the gray area. I can’t make that call either, but I kinda disagree with the black and white conclusion. I don’t need something to be perfectly ethical, few things are. I do however want to act in an ethical manner, and strive to be better.

Where do you draw the line? It sounds like you mean no AI can be used in any cases, unless all the material has been carefully vetted?

I highly doubt there isn’t illegal content in most AI models of any size by big tech.

I am not sure where I draw the line, but I do want to use AI services, but not for porn though.

It just means I don’t use AI to create porn. I figure that’s as good as it gets.

With this logic, any output of any pic gen AI is abuse… I mean, we can be 100% sure that there is CP in the training data (it would be a very big surprise if not) and all output is the result of all training data as far as I understand the statistical behaviour of photo gen AI.

We could be sure of it if AI curated its inputs, which really isn’t too much to ask.

Well AI is by design not able to curate its training data, but companies training the models would in theory be able to. But it is not feasible to sanitise this huge stack of data.

There is no ethical consumption while living a capitalist way of life.

ML always there to say irrelevant things

😆as if this has something to do with that

But to your argument: It is perfectly possible to tune capitalism using laws to get veerry social.

I mean every “actually existing communist country” is at its core still a capitalist system, or how do you argue against that?

It’s not just AI that can create content like that though. 3d artists have been making victimless rape slop of your vidya waifu for well over a decade now.

Yeah, I’m ok with that.

AI doesn’t create, it modifies. You might argue that humans are the same, but I think that’d be a dismal view of human creativity. But then we’re getting weirdly philosophical.

> Watching videos of rape doesn’t create a new victim. But we consider it additional abuse of an existing victim.

is this a legal thing? I’m not familiar with the laws surrounding sexual abuse, on account of the fact that i don’t frequently sexually abuse people, but if this is an established legal precedent that’s definitely a good argument to use.

However, on a mechanical level, a recounting of an instance isn’t necessarily a 1:1 retelling of that instance. A video of rape, for example, isn’t abuse any more so than the act of rape within it. Of course the nonconsensual recording and sharing of it (because it’s rape) could be considered a crime of its own, same with possession. However, i’m not sure interacting with the video is necessarily abuse in its own right, based on semantics. The video most certainly contains abuse, the watcher of the video may or may not like that, i’m not sure whether or not that should influence it, because that’s an external value. Something like “X person thought about raping Y person, and got off to it” would also be abuse under the same pretense at a certain point. There is certainly some interesting nuance here.

If i watch someone murder someone else, at what point do i become an accomplice to murder, rather than an additional victim in the chain. That’s the sort of litmus test this is going to require.

> That’s the gray area. AI is trained on images of abuse (we know it’s in there somewhere).

to be clear, this would be a statistically minimal amount of abuse, the vast majority of adult content is going to be legally produced and sanctioned, made public by the creators of those videos for the purposes of generating revenue. I guess the real question here, is what percent of X is still considered to be “original” enough to count as the same thing.

Like we’re talking probably less than 1% of all public porn, but a significant margin, is non consensual (we will use this as the base) and the AI is trained on this set, to produce a minimally alike, or entirely distinct image from the feature set provided. So you could theoretically create a formula to determine how far removed you are from the original content in 1% of cases. I would imagine this is going to be a lot closer to 0 than it is to any significant number, unless you start including external factors, like intentionally deepfaking someone into it for example. That would be my primary concern.

> That’s the gray area. AI is trained on images of abuse (we know it’s in there somewhere). So at what point can we say the modified images are okay because the abused person has been removed enough from the data?

another important concept here is human behavior, as it’s conceptually similar to the AI in question. There are clear strict laws regarding most of these things in real life, but we aren’t talking about real life. What if i had someone in my family who got raped at some point in their life, and this has happened to several other members of my family, or friends of mine, and i decide to write a book loosely based on the experiences of these individuals? (this isn’t necessarily going to be based on those instances, however it will most certainly be influenced by them)

There’s a hugely complex hugely messy set of questions, and answers that need to be given about this. A lot of people are operating on a set of principles much too simple to be able to make any conclusive judgement about this sort of thing. Which is why this kind of discussion is ultimately important.

> It can generate combinations of things that it is not trained on, so not necessarily a victim. But of course there might be something in there, I won’t deny that.

the downlow of it is quite simple, if the content is public, available for anybody to consume, and copyright permits it (i don’t see why it shouldn’t in most cases, although if you make porn for money, you probably hold exclusive rights to it, and you probably have a decent position to begin from, though a lengthy uphill battle nonetheless.) there’s not really an argument against that. The biggest problem is identity theft and impersonation, more so than stealing work.

yeah bro wait until you discover where neural networks got that idea from

> without a victim

You are wrong.

AI media models have to be trained on real media. The illegal content would mean illegal media and benefiting, supporting, & profiting from and to victims of crime.

The lengths and fallacies pedophiles will go to justify themselves is absurd.

Excuse me? I am very offended by your insinuations here. It honestly makes me not want to share my thought and opinions at all. I am not in any way interested in this kind of content.

I encourage you to read my other posts in the different threads here and see. I am not an apologist, and do not condone it either.

I do genuinely believe AI can generate content it is not trained on, that’s why I claimed it can generate illegal content without a victim. Because it can combine stuff from things it is trained on and end up with something original.

I am interested in learning and discussing the consequences of an emerging and novel technology on society. This is a part of that. Even if it is uncomfortable to discuss.

You made me wish I didn’t…

Don’t pay any attention to that kinda stupid comment. Anyone posting that kind of misinformation about AI is either trolling or incapable of understanding how generative AI works.

You are right, it is a victimless crime (for the creation of content). I could create porn with minions without using real minion porn, to use the most random example I could think of. There’s the whole defamation thing of publishing content without someone’s permission, but that I feel is a discussion independent of AI (we could already create nasty images of someone before AI, AI just makes it easier). But using such content for personal use… It is victimless. I have a hard time arguing against it. Would availability of AI created content with unethical themes allow people to get that out of their system without creating victims? Would that make the far riskier and horrible business of creating illegal content with real unwilling people disappear? Or at the very least much more uncommon? Or would it make people more willing to consume the content, creating a feeling of fake safety towards content previously illegal? There’s a lot of implications that we should really be thinking about and how it would affect society, for better or worse…

> Don’t pay any attention to that kinda stupid comment. Anyone posting that kind of misinformation about AI is either trolling or incapable of understanding how generative AI works

Thank you 😊

What’s illegal in real porn should be illegal in AI porn, since eventually we won’t know whether it’s AI

That’s the same as saying we shouldn’t be able to make videos with murder in them because there is no way to tell if they’re real or not.

That’s a good point, but there’s much less of a market for murder video industry

I mean, a lot of TV has murders in it. There is a huge market for showing realistic murder.

But I get the feeling you’re saying that there isn’t a huge market for showing real people dying realistically without their permission. But that’s more a technicality. The question is: is the content or the production of the content illegal? If it’s not a real person, who is the victim of the crime?

Yeah the latter. Also murder in films for the most part is for storytelling. It’s not murder simulations for serial killers to get off to, you know what I mean?

Good.

Hot take but I think AI porn will be revolutionary and mostly in a good way. Sex industry is extremely wasteful and inefficient use of our collective time that also often involves a lot of abuse and dark business practices. It’s just somehow taboo to even mention this.

Sometimes you come across a video and you are like ‘oh this. I need more of THIS.’

And then you start tailoring searches to try to find more of the same, but you keep getting generic or repeated results because of the lack of well described/defined content and the overuse of video tags (obviously to try to get more views with a large net rather than being specific).

But would i watch AI content if i could feed it a description of what i want? Hell yeah! I mean there are only so many videos of girls giving a blowjob while he eats tacos and watches old Black & White documentaries about the advancements of mechanical production processes.

Not to mention the users that may have a specific interest in some topic/action and basically all types of potential sources are locked behind paywalls.

Can confirm with my fetish. Some great artists and live actors who do it, but 90% of the content for it online is bad MS Paint level edits and horrid acting with props. That 10%? God tier, the community showers them in praise and commissions and only stop when they want to, unless a payment service like Visa or Patreon censors them and their livelihood as consenting adults.

If only there was a p2p way to send people funds without anyone knowing the sender or the recipient…

Yes, but it will also hurt the very workers’ bottom line, and with some clever workarounds, it’ll be used to fabricate defamatory material other methods were not good at.

I can see it being used to make images of people who don’t know and haven’t consented though and that’s pretty shit

Same with photoshop. I wish people didn’t do bad things with tools either, but we can’t let the worst of us define the world we live in.

Or cutting out faces with scissors and glueing them on playboy. Scissors and glue are just tools.

And clearly will be used for CP.

Filters on online platforms are surprisingly effective, though. CivitAI is begging for it, and they’ve kept it out.

Oh my God! That’s disgusting! AI porn online!? Where!? Where do they post those!? There’s so many of them, though. Which one?

Fucking disgusting unethical shit. Tell me where to find it so I can avoid it pls.

Removed by mod

pop is funny

I’m a representative of the American pornographical societies of America and I would like to educate myself and others in this disgusting AI de-generated graphical content for the purpose of informing my friends and family about this. Could we please have a link?

Even Onlyfans girls and boys will lose their jobs because of AI :(

deleted by creator

Definitely don’t click on this link otherwise you might try to install an AI locally

How would one go about installing this? Asking because I don’t want to accidentally install it on my system

I WISH I KNEW

then I could stop people from doing it

So, I definitely didn’t click! But having clicked on GitHub links in the past I can surmise that there’s a step by step install guide and also one for model acquisition. Just be sure not to click the link, and definitely do not follow what I assume is a very well written and easily understood step by step install guide.

This is Lemmy. Why not self host the generation? :)

deleted by creator

Take anything created, ffmpeg it into frames, then run it through this script’s stereoscopic mode:

https://github.com/thygate/stable-diffusion-webui-depthmap-script

Asking the real questions

Tell your friend that my friend is also asking too.

Theoretically? Maybe.

There are already networks to generate depth maps out of 2D video, so hooking up the output to that and a simpler VR video encoder should probably work.

Will it be jank as hell? Oh yeah. Nothing is conveniently packaged in Local AI Land, unfortunately.

this is gonna take rule 34 to a whole other level

It’s really called Wanx?

If you build it, they will porn.

Why you think the net was born? 🎶

deleted by creator

Yeah, but I’m sure it’ll improve in time. I can’t really see what’s the point of AI porn though, unless it’s to make images of someone who doesn’t consent, which is shit. For everything else, regular porn has it covered and looks better too.

Anime/semi-real style is already shockingly good.

It’s pleasing to the eye but lacks the soul and passion of a real, human gooner who just wants to make people cum

Is that JINX?

I don’t like that i was able to tell

fucking saw it too 😅

Ye

Yes, here’s the link to the uncensored clip, NSFW obviously, but you’ll need to put in an email to turn off NSFW filtering. https://civitai.com/images/60144368

The censored images in the thumbnail were all pulled from uploaded videos from this Lora on CivitAI called "Better Titfuck (WAN and HunYuan)"

I didn’t know who that was before looking it up and I totally thought you were talking about the Pokemon lol

*Aliboobie