- cross-posted to:

- programmerhumor@lemmy.ml

Oh cool.

So I still program like it’s the 1980s?

Makes me feel proficient. XD

Describing what they want in plain, human language is impossible for stakeholders.

‘I want you to make me a Facebook-killer app with agentive AI and blockchains. Why is that so hard for you code monkeys to understand?’

You forgot we run on fritos, tab, and mountain dew.

Maybe he want to write damn login page himself.

Not say it out loud. Not stupid… Just proud.

Even writing an RFC for a mildly complicated feature to mostly describe it takes so many words and communication with stakeholders that it can be a full time job. Imagine an entire app.

You want the answer to the ultimate question of life, the universe, and everything? Ok np

The canonical video which demonstrates that truth.

LLMs need to be trained to work with reptilian language. Problem solved.

You can add SQL in the 70s. It was created to be human readable so business people could write sql queries themselves without programmers.

By that logic could we say the same about compilers.

Or anything above assembly.

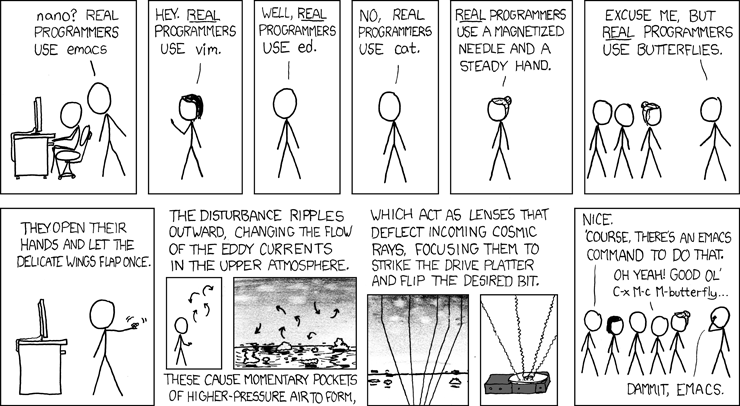

Real programmers use punchcards.

Ironically, one of the universal things I’ve noticed in programmers (myself included) is that newbie coders always go through a phase of thinking “why am I writing SQL? I’ll write a set of classes to write the SQL for me!” resulting in a massively overcomplicated mess that is a hundred times harder to use (and maintain) than a simple SQL statement would be. The most hilarious example of this I ever saw was when I took over a young colleague’s code base and found two classes named “OR.cs” and “AND.cs”. All they did was take a String as a parameter, append " OR " or " AND " to it, and return it as the output. Very forward-thinking, in case the meanings of “OR” and “AND” were ever to change in future versions of SQL.

Object Relational Mapping can be helpful when dealing with larger codebases/complex databases for simply creating a more programmatic way of interacting with your data.

I can’t say it is always worth it, nor does it always make things simpler, but it can help.

I don’t have a lot of experience with projects that use ORMs, but from what I’ve seen it’s usually not worth it. They tend to make developers lazy and create things where every query fetches half the database when they only need one or two columns from a single row.

Yeah. Unless your data model is dead simple, you will end up not only needing to know this additional framework, but also how databases and SQL work to unfuck the inevitable problems.

the problem with ORM is that some people go all in on it and ignore pure SQL completely.

In reality ORM only works well for somewhat simple queries and structures, and at some point you will have to write your own queries in SQL. But then you have some bonus complexity that comes from two different things filling the same niche. It’s still worth it, but there is no free cake.

I’ve always seen that as an escape hatch for some of the most typical issues with ORMs, like the N+1 problem, but I never fully bought it as a real solution.
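For anyone who hasn’t run into the N+1 problem, here is a minimal sketch of it using only Python’s built-in `sqlite3`, no ORM involved. The `authors`/`books` schema and the function names are made up for illustration. The first function does what a lazy-loading ORM tends to do behind your back (one query for the list, then one more per row); the second gets the same data with a single JOIN.

```python
import sqlite3

# Hypothetical schema, purely for illustration.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE authors (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE books (id INTEGER PRIMARY KEY, author_id INTEGER, title TEXT);
    INSERT INTO authors VALUES (1, 'Ann'), (2, 'Bob'), (3, 'Cy');
    INSERT INTO books VALUES (1, 1, 'A1'), (2, 1, 'A2'), (3, 2, 'B1'), (4, 3, 'C1');
""")

# Record every statement SQLite executes from here on.
statements = []
conn.set_trace_callback(statements.append)


def titles_n_plus_one():
    """One query for the authors, then one extra query per author."""
    result = {}
    authors = conn.execute("SELECT id, name FROM authors").fetchall()
    for author_id, name in authors:
        rows = conn.execute(
            "SELECT title FROM books WHERE author_id = ? ORDER BY id",
            (author_id,),
        ).fetchall()
        result[name] = [title for (title,) in rows]
    return result


def titles_single_join():
    """The same data fetched with a single JOIN."""
    result = {}
    rows = conn.execute(
        "SELECT a.name, b.title FROM authors a "
        "JOIN books b ON b.author_id = a.id ORDER BY b.id"
    ).fetchall()
    for name, title in rows:
        result.setdefault(name, []).append(title)
    return result


statements.clear()
slow = titles_n_plus_one()
n_plus_one_count = len(statements)

statements.clear()
fast = titles_single_join()
join_count = len(statements)

print(n_plus_one_count, join_count)
```

Both functions return the same dictionary; the only difference is how many round trips it takes to build it, and that difference grows with the number of rows.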

Mainly because in large projects this gets abused (turns out little or none of the SQL has a companion test) and one of the most oversold benefits of ORMs (the possibility of “easily” refactoring the model) goes away.

Since SQL is code and should be tested like any other code, I’d rather ditch the whole ORM thing and go SQL from the beginning. It may be annoying for simple queries but induces better habits.

I used to use ORMs because they made switching between local dev DBs (like SQLite or Postgres) and production DBs usually painless. Especially for Ruby/Sinatra/Rails since we were writing the model queries in another abstraction. It meant we didn’t have to think as much about joins and all that stuff. Until the performance went to shit and you had to work out why.

My standard for an ORM is that if it’s doing something wrong or I need to do something special, it’s trivial to move it aside and either use plain SQL or its SQL generator myself.

In production code, plain SQL strings are a concern for me since they’re subject to the whole array of human errors and vulnerabilities.

Something like

`stmt = select(users).where(users.c.name == 'somename')`

is basically as flexible as the string, but it’s not going to forget a quote or neglect to use SQL escaping or parametrize the query.

And sometimes you just need it to get out of the way because your query is reaaaaaal weird, although at that point a view you wrap with the ORM might be better.
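To make the “forget a quote or neglect to parametrize” point concrete, here is a rough sketch. It uses Python’s built-in `sqlite3` rather than the query builder above, so that it runs without installing anything; the `users` table and the hostile input are invented for the example. The idea is the same either way: the value is bound as a parameter and never becomes part of the SQL text.

```python
import sqlite3

# Hypothetical table, for illustration only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])

# Input crafted to break out of the quoted string.
hostile = "nobody' OR '1'='1"

# Unsafe: the input is spliced into the SQL text, so it changes the query itself.
unsafe_sql = f"SELECT name FROM users WHERE name = '{hostile}'"
unsafe_rows = conn.execute(unsafe_sql).fetchall()

# Safe: the input is bound to a placeholder and compared as a plain value.
safe_rows = conn.execute(
    "SELECT name FROM users WHERE name = ?", (hostile,)
).fetchall()

print(len(unsafe_rows), len(safe_rows))
```

The hand-built string matches every row because the `OR '1'='1'` ends up as part of the WHERE clause, while the bound version simply finds no user with that odd name.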

If you’ve done things right though, most of the time you’ll be doing simple primary key lookups and joins with a few filters at most.

I did that myself back in the day. Not overly complicated, but a SQL builder.

I think it’s because SQL is sort-of awkward. For basic uses you can take a SQL query string and substitute some parameters in that string. But, that one query isn’t going to cover all your use cases. So, then you have at least 2 queries which are fairly similar but not similar enough that it makes sense just to do string substitutions. Two strings that are fairly similar but distinct suggests that you should refactor it. But, maybe you only make a very simple query builder. Then you have 5 queries and your query builder doesn’t quite cover the latest version, so you refactor it again.

But, instead of creating a whole query builder, it’s often better to have a lot of SQL repetition in the codebase. It looks ugly, but it’s probably much more maintainable.

What part of this is irony?

So is COBOL.

(Is there any sane alternative to SQL?)

(Is there any sane alternative to SQL?)

Yes, no SQL.

I lol’d

AWK?

(Is there any sane alternative to SQL?)

Well there is our lord and savior, or so I’m told by many MBA(s), No SQL

Oh wait you said Sane. Nevermind.

Least it’s an improvement over no/low code. You can dig in and unfuck some ai code easily enough but god help you if your no code platform has a bug that only their support team can fix. Not to mention the vendor lock in and licensing costs that come with it.

Doesn’t matter if they can replace coders. If CEOs think it can, it will.

And now, it’s good enough to look like it works so the CEO can just push the problem down the road and get an instant stock inflation

And then it’ll all go to shit and proper programmers will be able to charge bank to sort it out.

I don’t want to spend my career un-fucking vibe code.

I want to create something fun and nice. If I wanted to clean other people’s mess, I would be a janitor.

If I wanted to clean other people’s mess, I would be a janitor.

I’ll take your share of the slop cleanup if you don’t want it. I wouldn’t mind twice the slop cleanup ~~extortion~~ salary.

Cleaning other people’s mess is okay for a while. But making a career out of it is too much for me.

I do firmware for embedded systems and every mechanical, electronics or general engineering issue is shoved down in my court because it’s easier for people to take shortcuts in the engineering process and say “we’ll fix it in the firmware” since I can change code 100 times a day.

Slop is the embodiment of that on steroids and it will get old pretty fast.

Cleaning other people’s mess is okay for a while. But making a career out of it is too much for me.

Oh yes. I feel that, too. I’ll give them maybe a year of help, for the right price. Two years if the price is very right. Haha.

Slop is the embodiment of that on steroids and it will get old pretty fast.

So true.

I hope it works like that.

I hope all those companies go bankrupt, people hiring those CEOs lose everything, and the CEOs never manage to find another job in their lives…

But that’s not a bad second option.

The CEOs will get a short-term boost to profits and stock price. They’ll get a massive bonus from it. Then, a few years later, they will retire with a nice compensation package just before shit starts blowing up, leaving the company, employees, and stockholders up shit’s creek from their short-sighted plan.

But the CEO will be just fine on his yacht, don’t worry.

C-Suite execs are probably the one thing LLMs could actually replace and save the company more money than layoffs, but it’ll never happen.

Companies aren’t democracies. They are monarchies with the illusion of democracy via shareholders.

It already does, there are people selling their services to unfuck projects that were built with generated code.

Hell yeah. Those people are smart. I hope they get super rich on fixing AI nonsense.

They’ll end up being exploited

What do you think happens already lmao

What’s your point?

I don’t think this will lead to any additional exploitation as that’s already the general business model.

That’s the point I was making

It never actually seems to work out that way though. Sure, for Y2K there was a short period where there were decent contracts fixing that bug in various codebases, but it wasn’t something that lasted very long.

Managers and owners would much rather pass off a terrible PoS and have their users deal with it, or somehow get the government to bail them out, or hire a bunch of Uyghur programmers from a Chinese labour camp, or figure out some other way to avoid having to pay programmers / software engineers what they’re actually worth.

LLMs often fail at the simplest tasks. Just this week I had it fail multiple times where the solution ended up being incredibly simple and yet it couldn’t figure it out. LLMs also seem to “think” any problem can be solved with more code, thereby making the project much harder to maintain.

LLMs won’t replace programmers anytime soon but I can see sketchy companies taking programming projects by scamming their clients through selling them work generated by LLMs. I’ve heard multiple accounts of this already happening and similar things happened with no code solutions before.

Today I removed some functions and moved some code to separate services and being the lazy guy I am, I told it to update the tests so they no longer fail. The idiot pretty much undid my changes and updated the code to something very much resembling the original version which I was refactoring. And the fucker did it twice, even with explicit instructions to not do it.

I have heard of agents deleting tests or rewriting them to be useless like ‘assert(true)’.

Your anecdote is not helpful without seeing the inputs, prompts and outputs. What you’re describing sounds like not using the correct model, not providing good context, or not giving tools to a reasoning model that can intelligently populate context for you.

My own anecdotes:

In two years we have gone from copy/pasting 50-100 line patches out of ChatGPT, to having agent enabled IDEs help me greenfield full stack projects, or maintain existing ones.

Our product delivery has been accelerated while delivering the same quality standards, verified by our internal best practices, which we’ve codified as deterministic checks in CI pipelines.

The power comes from planning correctly. We’re in the realm of context engineering now, and learning to leverage the right models with the right tools in the right workflow.

Most novice users have the misconception that you can tell it to “bake a cake” and get the cake you had in your mind. The reality is that baking a cake can be broken down into a recipe with steps that can be validated. You as the human-in-the-loop can guide it to bake your vision, or design your agent in such a way that it can infer more information about the cake you desire.

I don’t place a power drill on the table and say “build a shelf,” expecting it to happen, but marketing of AI has people believing they can.

Instead, you give an intern a power drill with a step-by-step plan with all the components and on-the-job training available on demand.

If you’re already good at the SDLC, you are rewarded. Some programmers aren’t good at project management, and will find this transition difficult.

You won’t lose your job to AI, but you will lose your job to the human using AI correctly. This isn’t speculation either, we’re also seeing workforce reduction supplemented by Senior Developers leveraging AI.

This entire comment reads like lightly edited ai slop.

Well, I typed it with my fingers.

Explicit programmers are needed because the general public has failed to learn programming. Hiding the complexity behind nice interfaces makes it actually more difficult to understand programming.

This comes all from programmers using programs to abstract programming away.

What if the 2030s change the approach and use AI to teach everybody how to program?

I’m afraid I can’t let you do that, Dave.

You’ve not read the manual.

Hiding the complexity behind nice interfaces makes it actually more difficult to understand programming.

This is a very important point that most of my colleagues with an OOP background seem to miss. They build a bunch of abstractions and then say it’s easy, because we have a one-liner in the calling code, pretending that the rest of the code doesn’t exist. Oh yes, it certainly exists! And needs to be maintained, too.

I find this to be a real problem with visual shaders. I know how certain mathematical formulas affect an input, but instead of just pressing the Enter key and writing it down, I now have to move blocks around, and oh no, they were nicely logically aligned, now one block is covering another block, oh noo, what a mess and the auto sort thing messes up the logical sorting completely… well too bad.

And I find that most solutions on the internet utilizing the visual editor tend to forget that previous outputs can be reused. Getting normals from already generated noise without resampling somehow becomes arcane knowledge.

Edit: words.

the general public has failed to learn programming

That’s like saying that the general public has failed to learn surgery, or the general public has failed to learn chemical engineering.

There are certain things that it just doesn’t make sense for the general public to ever be expected to learn.

People bake and learn basic chemistry. The baseline of general programming knowledge could be more than zero. It’s a fundamental part of our society.

Baking is not chemical engineering. Chemical engineering doesn’t even have much to do with chemistry. It’s mostly about temperatures and flow rates, pressure, etc.

Saying “the baseline of programming knowledge could be more than zero” is meaningless. The baseline of chemical engineering knowledge could also be more than zero. It’s also a fundamental part of our society. But, the average person doesn’t need to know how to program, just like the average person doesn’t need to know how to design a refinery.

People do learn some basic computer skills. They should learn more. They should know about files. They should know how to back up their data. And, more importantly, they should learn how to restore data from a backup after something goes wrong. They should know how to properly update their devices, how to tell if their devices are infected, and the basics of managing a home network. They sometimes learn how to do basic functions in excel spreadsheets. That’s about as far as they do, or should need to go in programming / IT. Beyond that, why should the average person need to know how to do recursion, or how loops work?

It gives them the opportunity to create their own tools.

Sure, and learning surgery could let them do their own operations.

If you look at “I didn’t have eggs” you’ll quickly figure out that very few people are learning chemistry from baking/cooking.

I memorized by rote the chord progressions in my favorite style of music. This does not mean I understand music theory at all.

I I I I IV IV I I V IV I IV

You nailed it ok.

Worse than that, I don’t even really know how they relate to each other, I just know “key of C” means C, F, G. I actually even went so far as to write each major key progression down with my cheater chord pics.

So, I can tell you what I know from a bassist’s PoV.

What I posted was the 12 bar blues chord progression in Roman Numeral notation. What it tells you is that if you start in the key of C, the other bars are 4 and 5 notes up from C. In addition, since the notation is in uppercase, the chords / arpeggios you can play in that bar are major not minor. So, if a bassist is playing a walking bass line for 12 bar blues, they’ll probably start those bars with C, F and G. But, since they’re C major, F major and G major, the bassist can play major arpeggios in that key in those bars and it will sound good.
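If it helps to see the Roman numeral idea spelled out mechanically, here is a small sketch. Everything in it (the `chord_for` name, the sharps-only note table, the default key of C) is my own assumption for illustration, and it only handles plain numerals, not extensions like V7. Uppercase numerals come out as major chords and lowercase as minor, with the root taken from the major scale of the key.

```python
# Twelve chromatic notes, spelled with sharps only (an illustrative
# simplification: flat keys such as F would show A# instead of Bb).
NOTES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

# Semitone offsets of the seven major-scale degrees from the root.
MAJOR_SCALE_STEPS = [0, 2, 4, 5, 7, 9, 11]

# Roman numeral -> zero-based scale degree.
DEGREES = {"I": 0, "II": 1, "III": 2, "IV": 3, "V": 4, "VI": 5, "VII": 6}


def chord_for(numeral, key="C"):
    """Resolve a plain Roman numeral (e.g. 'IV' or 'iv') to a chord name."""
    degree = DEGREES[numeral.upper()]
    root = NOTES[(NOTES.index(key) + MAJOR_SCALE_STEPS[degree]) % 12]
    quality = "major" if numeral.isupper() else "minor"
    return f"{root} {quality}"


# The progression exactly as posted earlier in the thread.
progression = "I I I I IV IV I I V IV I IV".split()
chords = [chord_for(numeral) for numeral in progression]
print(chords)
```

In the key of C this only ever produces C major, F major and G major, which is the “C, F, G” that got memorized by rote above.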

For other kinds of blues progressions, if you know Radiohead’s “Creep”, you can see that as being an 8 bar blues with the following progression:

| 1 | 2 | 3 | 4 |
|---|---|---|---|
| I | III | IV | iv |
| I | vi | ii | V7 |

So if the root is C, the 2nd bar is E major, third bar is F major, 4th bar is F minor, and so on. Because the 3rd and 4th bars are both rooted at F the bassist can just play an F there and it sounds good (which is what I think Radiohead’s bassist does), but if the bassist chooses to play more notes in an arpeggio, they have to play notes from the F-minor scale in that 4th bar or it doesn’t match.

As for why those various chord progressions happen to work, that I don’t know. I don’t know if anybody does. But, I do know there’s some math / physics behind it. A perfect fifth is one of the most pleasant sounding intervals, and those notes are at a frequency ratio of 2:3. The only better sounding thing is an octave at 1:2. And, the inverse of a perfect fifth is a perfect fourth. So, songs being made from 4ths, 5ths and octaves makes sense.
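The ratio arithmetic is easy to check for yourself. This is just a sketch using exact fractions; the 220 Hz reference pitch is an arbitrary choice of mine, and the ratios are the pure (just intonation) ones, which modern equal-tempered tuning only approximates.

```python
from fractions import Fraction

# Pure interval ratios, written as upper frequency / lower frequency.
OCTAVE = Fraction(2, 1)
FIFTH = Fraction(3, 2)   # the 2:3 ratio mentioned above
FOURTH = Fraction(4, 3)


def invert(interval):
    """Inverting an interval: whatever is left to complete the octave."""
    return OCTAVE / interval


root_hz = 220                 # arbitrary reference pitch for illustration
fifth_hz = root_hz * FIFTH    # a perfect fifth above the root
fourth_hz = root_hz * FOURTH  # a perfect fourth above the root

# Equal temperament approximates the pure fifth with seven semitones.
tempered_fifth = 2 ** (7 / 12)

print(invert(FIFTH), FIFTH * FOURTH, float(fifth_hz), round(tempered_fifth, 4))
```

Inverting the fifth gives exactly 4/3, and stacking a fifth on top of a fourth lands exactly on the octave, which is why those three intervals keep turning up together.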

This is a lovely explanation, but circling back to the reason we’re even discussing it. I don’t want to play cool things. I want to strum my ukulele with a bunch of other people and feel like I’m part of something but not work too hard. I’ve memorized my favorite/most comfortable chord fingerings, and the most common progressions and that’s all I do.

I bake in the same way. I have a few recipes I like. The most deviation I do is halving or doubling. But the recipe is good so the food turns out fine.

There’s plenty of other stuff I’m a nerd about and I get deep into the weeds of tiny details with other people who care deeply.

What if the 2030s change the approach and use AI to teach everybody how to program?

What does AI (already known to be an unreliable bullshitting machine) provide to students that existing tutorials, videos and teachers do not already?

Also the companies investing in AI are not trying to teach their workers to be better, they’re trying to make more profit by replacing workers or artificially increasing their outputs. Teaching people to program is not what they care about

When growing up in the 70’s “computer programmers” were assumed to be geniuses. Nowadays they are maybe one tier above fast food workers. What a world!

Nowadays they are maybe one tier above fast food workers.

:-/

Having worked both jobs, I could point to a few differences

Yeah fast food is a lot more stressful.

Every single job in my entire life I have made more money, and my workload has gotten easier. I am grateful everyday I escaped the trap. Very few do.

Food is essential, the new shiny way to gobble more RAM to display a blue mushroom in a button isn’t.

Well to be fair if you’re a programmer in the 70s you might as well be a genius.

That environment was wild though. At the time, you basically needed to be an electrical engineer and/or a licensed HAM operator, just to have your head wrapped around how it all worked. Familiarity with the very electronics of the thing, even modifying the hardware directly when needed, was crucial to operating that old tech.

The most consistent and highest paying jobs I’ve had are replacing or fixing legacy and garbage systems. I don’t think the current-gen LLMs are anywhere close to being able to do those jobs, and they are in fact creating more of that work with all the insecure, inefficient trash they generate.

Give it a few months.

Fellow tech-trash-disposal-engineer here. I’ve made a killing on replacing corporate anti-patterns. My career features such hits and old-time classics like:

- email as workflow

- email as version control

- email as project management

- email as literally anything other than email

- excel as a relational database

- excel as project management

- help, our wiki is out of control

- U-drive as a multi-user collaboration solution

- The CEO’s nephew wrote this 8 years ago and we can’t get rid of it

In all of these cases, there were always better answers that maybe just cost a little bit more. AI will absolutely cause some players to train-wreck their business, all to save a buck, and we’ll all be there to help clean up. Count on it.

excel as a relational database

That reminds me of a story. I used to do IT consulting, years ago. One client was running their 5 person real estate office off a low quality, consumer grade, box store HP desktop repurposed as a server. All collaboration was through their U drive, plus every profile had their desktop folder redirected there.

The complaint was the classic “everything is slow”, which turned out to be “opening my spreadsheet takes 10 minutes then it’s slow”. Yeah, because that poor little “server” had a single 100 Mb jack and the owner had a 1.5 GB excel spreadsheet project where he was trying to build a relational database and property valuation tool. Six fucking heavily cross referenced tabs, some with thousands of entries. He was so proud when I asked him to explain what was going on there. He fired me when I couldn’t fix his issue without massive changes to either his excel abomination or hardware.
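Back-of-the-envelope, the network alone puts a hard floor under that load time. A quick sketch, assuming “100 Mb” means a 100 Mbit/s link, the file is 1.5 GB in decimal units, and ignoring protocol overhead, disk speed and Excel’s own parsing (all of which only make it worse):

```python
def min_transfer_seconds(size_bytes, link_bits_per_second):
    """Best-case time to move a file over a link, ignoring all overhead."""
    return size_bytes * 8 / link_bits_per_second


spreadsheet_bytes = 1.5e9  # assumption: 1.5 GB, decimal units
link_bps = 100e6           # assumption: "100 Mb" means 100 Mbit/s

best_case = min_transfer_seconds(spreadsheet_bytes, link_bps)
print(f"{best_case:.0f} seconds, about {best_case / 60:.0f} minutes")
```

So even on a perfectly idle link, just getting the file across takes a couple of minutes before Excel starts recalculating anything.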

Hey, kudos for finding multiple anti-patterns all in one place like that. I didn’t even think about “underpowered desktop as company server” as another pattern, but here we are.

Sorry you didn’t get the contract, but that sounds like a blessing in disguise to be honest.

It depends on the methodology. If you’re trying to do a direct port, you’re probably approaching it wrong.

What matters to the business most is data, your business objects and business logic make the business money.

If you focus on those parts and port portions at a time, you can substantially lower your tech debt and improve developer experiences, by generating greenfield code which you can verify, that follows modern best practices for your organization.

One of the main reasons many users are complaining about the quality of code edited by agents comes down to the current naive tooling. Most use sloppy find/replace techniques with regex and user tools. As AI tooling improves, we are seeing agents given more IDE-like tools with intimate knowledge of your codebase using things like code indexing and ASTs. Look into Serena, for example.

Well, have you seen what game engines have done to us?

When tools become more accessible, it mostly results in more garbage.

I’m guessing 4 out of 5 of your favorite games have been made with either unity or unreal. What an absolutely shit take.

The fact that they have favorite games using these engines has absolutely nothing to do with there being massive amounts of garbage games. The shit take is yours.

He’s framing it as if the overall effect is bad. Game engines have been a serious boon to the industry. The good massively outweighs the bad.

The good massively outweighs the bad.

That’s your opinion.

I know I have stopped purchasing as many games because the average quality has tanked.

59% of games sold on steam either use unity or unreal. Not released, but sold. I think it’s the general opinion and you’re an outlier here. I invite you to post lists of your recently bought games or most played games. I doubt they all have their own custom engine.

Only 10% of new games used a custom engine, and they are by far mostly triple A games from big companies. Unless you just don’t play indie games, you have bought unreal or unity games even if you buy less in general.

And yes, that means 10% account for 40% of sales but it’s no surprise since having your own engine and a big budget go hand in hand.

It’s absolutely insane to actually think we are worse off. Would you rather a walled garden, where only multi million dollar companies can afford to develop games, just because of a few shovelware garbage titles that just get ignored anyways? Do you get a shit game as a gift every Christmas or something? The negatives aren’t even affecting anything.

Here are the stats if you are interested. https://www.creativebloq.com/3d/video-game-design/unreal-engine-dominates-as-the-most-successful-game-engine-data-reveals

Cool story.

Try and build your own engine bro. Making a game is seriously hard, even when unity does 4/5ths of the work. Having fewer options wouldn’t somehow lead to better quality, it would make the whole scene shit. You are simply speaking from a place of ignorance. “Cool story”, lol.

Your guess is wrong. :P And anyways, I didn’t say all games using an easy to use game engine are shit.

If you use an easy game engine (idk if unreal would even fit this, btw), it is easier to produce something usable at all. Meanwhile, the effort needed to make the game good (i.e. game design) stays the same. The result is that games reach a state of being publishable with a lower amount of effort spent in development.

I’m still waiting to be replaced by robots and computers.

My company recently acquired another firm that tried to outsource the entire IT department and proceeded to shit itself to death.

Go ahead cowards. Replace me with a computer. I will become more powerful than you could ever imagine.

Feeling that. My company is offshoring and outsourcing a lot of stuff now. It’s a nightmare. But profits are up. So hey, who cares if the software is held together with hopes and dreams. And our hosted services admins don’t have a clue.

This is why I half ass things with AI. Mgmt clearly doesn’t care.

After shovels were invented, we decided to dig more holes.

After hammers were invented, we needed to drive more nails.

Now that vibe coding has been invented, we are going to write more software.

No shit

If ChatGPT’s browser is just another chromium clone and they couldn’t get their own AI to write a browser, I doubt other customers of theirs will get their shitbot to write code for them either.

Literally nothing of consequence has been built with visual, mda or no-code paradigms.

Well let me tell you, the things built in the vibe-coding paradigm will have consequences

Your D:\ has been deleted. I’m very sorry.

Well, not mine, I can tell you that. And I’m not about to give an AI write permissions for /mnt

And after each of these, there’s been _more_ demand for developers.